A New Frontier at the Intersection of Gen-AI and Data Privacy

Brace yourselves, folks. The AI revolution is crashing into our lives like a wrecking ball. In this era of unprecedented technological evolution, generative AI (Gen-AI) has become an inescapable force, penetrating every facet of our existence—from information consumption to work and entertainment.

Trying to evade AI these days is like trying to outrun your shadow. It’s futile. Who knows where it’ll take us next? One thing’s certain: it will be one thrilling, unpredictable adventure!

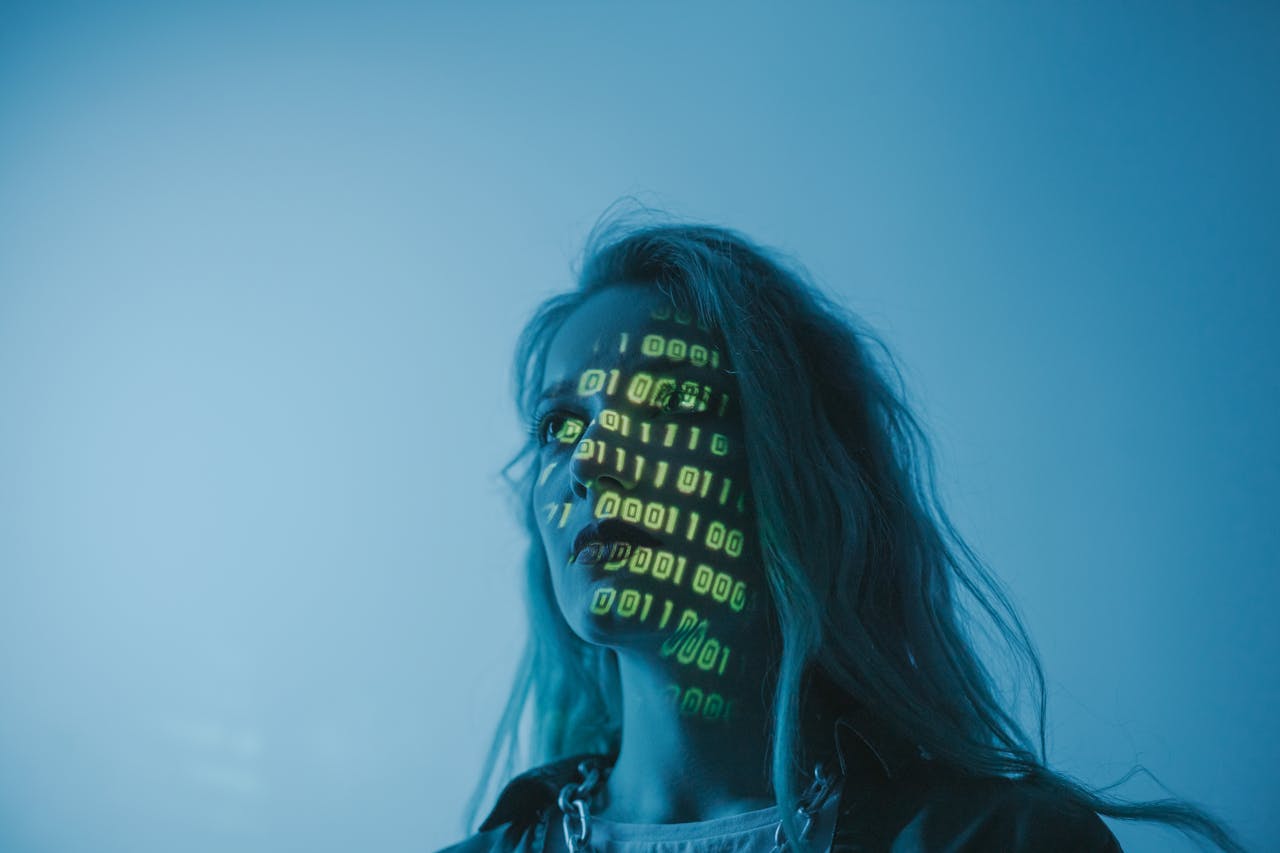

The evolution of Gen-AI also brings unforeseen challenges to data privacy.

For instance, AI-generated images or ‘deepfakes’ may enable more advanced methods of bypassing security safeguards or deceiving consumers into giving up their most private information. This intersection of Gen-AI and data privacy therefore places us at a critical and precarious juncture for privacy and safety.

However, the opposite is also true: if AI can make data privacy threats more sophisticated, it also has massive potential to strengthen our safeguards against cybercriminals. The deeper our conversations about AI go, the clearer it becomes that AI could revolutionise our data privacy practices in remarkable ways.

So, this paradigm shift in AI usage isn’t just about protecting existing data—it’s also about empowering us to harness data’s potential while fiercely safeguarding individual privacy rights.

Moving from Reactive to Proactive Privacy

Data protection as we know it has largely been reactive, focusing on mitigating risks only after breaches occur.

However, the surge in Gen-AI demands a shift to proactive privacy engineering. This approach embeds privacy principles into AI systems from their inception rather than as an afterthought.

By adopting privacy-by-design methodologies, we can now integrate advanced safeguards such as differential privacy, federated learning, and homomorphic encryption into AI models. These techniques help keep consumer data protected, even as it drives innovation.
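To make one of these safeguards concrete, here is a minimal sketch of differential privacy using the Laplace mechanism on a simple counting query. It is an illustration under stated assumptions, not a production implementation: the function names (`laplace_noise`, `private_count`), the sample records, and the choice of `epsilon` are all hypothetical, and a real system would rely on a vetted privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Draw one Laplace(0, scale) sample.

    The difference of two independent exponential samples with the
    same rate (1 / scale) follows a Laplace distribution.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of the records that match `predicate`.

    A counting query has sensitivity 1 (adding or removing one person
    changes the true count by at most 1), so Laplace noise with scale
    1 / epsilon gives epsilon-differential privacy for this one query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical customer records, for illustration only.
    customers = [{"age": age} for age in (17, 25, 34, 41, 52, 68)]
    noisy = private_count(customers, lambda c: c["age"] >= 18, epsilon=1.0)
    print(f"Noisy number of adult customers: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger one gives more accurate answers. The published figure stays useful in aggregate, yet no single customer’s presence can be confidently inferred from it.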

The great thing about proactive privacy engineering is that it strengthens our defences and cultivates a culture of accountability and responsibility within the AI ecosystem.

Navigating the Ethical Landscape

Enter the blurred lines! Gen-AI obscures the boundary between reality and simulation, which presents complex ethical challenges in data protection. For instance, we now have AI-generated synthetic speech and even AI-generated fashion models.

While synthetic data offers promising privacy solutions, its widespread use raises concerns about accountability and transparency. Addressing these challenges requires embracing ethical AI frameworks that prioritise human values and dignity.

This is precisely why we at Enprivacy stress the need for clear guidelines for generating and using synthetic data. These guidelines must be established to ensure adherence to fairness, transparency, and consent principles. Integrating ethics into Gen-AI’s core can lead to responsible innovation that prioritises individual well-being.

The good news is that Singapore is looking to take the lead in AI governance and ethical AI usage, protecting the public interest while still facilitating innovation. Singapore’s Model AI Governance Framework covers eleven AI ethics principles, which include accountability and human agency and oversight.

The Era of Data Democracy

Data sovereignty is taking centre stage in this Gen-AI revolution, redefining how we approach data protection. Gone are the days when centralised models held our personal information hostage, raising concerns about monopolies and exploitation. Gen-AI offers a democratising force, empowering individuals to reclaim ownership and control over their data.

Imagine user-friendly interfaces and applications tailored for even the most tech-averse among us, shielding them from phishing attacks and data breaches that have long plagued the digitally illiterate. Through decentralised data governance and blockchain technologies, you become the gatekeeper of your personal information, dictating how, when, and with whom it’s shared.

This shift from centralised authorities to distributed networks fosters a more equitable data ecosystem, where privacy is a fundamental right, not a privilege reserved for the elite.

Cultivating Digital Empowerment

Revolutionising data protection in the Gen-AI era revolves around cultivating a culture of digital empowerment. It means enabling individuals to take charge of their digital identities, make informed decisions about their data, and actively shape AI’s future.

This task requires investing in digital literacy programmes that educate people about Gen-AI’s impact on data privacy and security. It also requires fostering collaboration between industry, academia, and civil society to co-create solutions that prioritise human-centric values.

So, what can your business do at an organisational level?

You might encourage your employees to enrol in courses on the subject. For instance, Singapore Management University’s Advanced Certificate in Generative AI, Ethics and Data Protection covers the ethical and data protection implications of Gen-AI. Short technical courses such as this one equip individuals to protect their organisations from Gen-AI-created threats, and to use AI to keep up with evolving privacy threats and protect their own data.

Responsible Innovation: Using AI to Safeguard Consumer Interests

As we stand on the brink of a Gen-AI-defined era, revolutionising data protection is more urgent than ever.

By embracing proactive privacy engineering, navigating ethical complexities, democratising data sovereignty, and cultivating digital empowerment, we can pave the way for responsible innovation that protects individual privacy and dignity.

The most profound insights in this journey lie beyond the obvious. It’s about reimagining our relationship with technology and forging a future where privacy is not an afterthought but a guiding principle in the age of Gen-AI.
